InSight

Enabling Seamless Gaze-Driven Interactions in Smart Environments

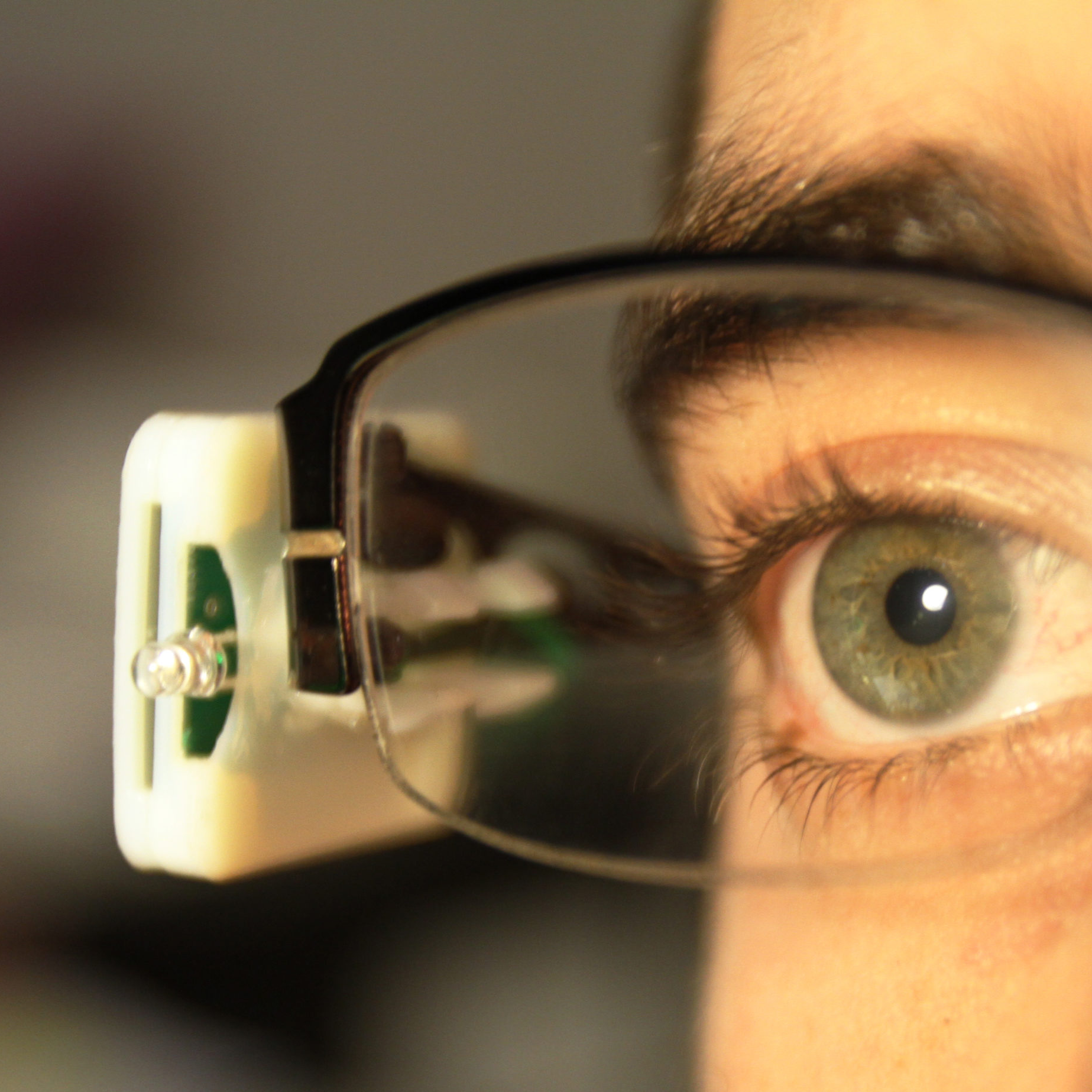

The proliferation of smart devices can lead to complex control schemes that require many dedicated input devices. InSight aims to simplify this by letting users control smart devices through the intuitive and natural act of looking at them. "How would objects act if they knew we were looking at them?"

InSight employs custom-built devices for this interaction. The "G-Ripple," worn by the user, emits a precise infrared beam that encodes user information. "G-Sens" devices, attached to smart objects, detect the "G-Ripple" beam and relay the user information to a central hub. The hub combines the gaze data with other input sources (e.g., a mouse) to determine the intended target device and proactively map input commands to it for seamless control.

InSight features custom "G-Ripple" and "G-Sens" hardware built from low-power IR components and microcontrollers. A ZigBee mesh network provides robust, scalable communication throughout the home. The central hub houses the software intelligence, including an ML algorithm (a decision tree) that uses gaze data, smart object information, and other inputs to infer user intent in real time.
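The hub's role described above can be illustrated with a minimal sketch. This is not InSight's actual implementation: the tree here is hand-written rather than trained, and every name in it (`GazeEvent`, `InputEvent`, `COMMAND_MAP`, `MIN_DWELL_MS`, the device names, the dwell threshold, and the fallback to the previous target) is a hypothetical stand-in chosen for illustration.

```python
from dataclasses import dataclass
from typing import Optional, Tuple


@dataclass
class GazeEvent:
    user_id: int    # assumed: ID decoded from the G-Ripple beam
    device_id: str  # assumed: smart object whose G-Sens saw the beam
    dwell_ms: int   # assumed: how long the beam has been detected


@dataclass
class InputEvent:
    source: str  # e.g. "mouse"
    action: str  # e.g. "scroll_up"


# Hypothetical per-device mapping from generic input to device commands.
COMMAND_MAP = {
    "lamp": {"scroll_up": "brightness_up", "click": "toggle_power"},
    "speaker": {"scroll_up": "volume_up", "click": "play_pause"},
}

MIN_DWELL_MS = 300  # assumed threshold separating a glance from intent


def infer_target(gaze: Optional[GazeEvent],
                 last_target: Optional[str]) -> Optional[str]:
    """Hand-written decision tree standing in for a trained model."""
    if gaze is None:
        return last_target          # no gaze data: keep previous target
    if gaze.device_id not in COMMAND_MAP:
        return last_target          # unknown object: keep previous target
    if gaze.dwell_ms >= MIN_DWELL_MS:
        return gaze.device_id       # sustained gaze: switch target
    return last_target              # brief glance: keep previous target


def route(gaze: Optional[GazeEvent], event: InputEvent,
          last_target: Optional[str] = None) -> Optional[Tuple[str, str]]:
    """Map a generic input event onto the inferred target device."""
    target = infer_target(gaze, last_target)
    if target is None:
        return None
    command = COMMAND_MAP[target].get(event.action)
    return (target, command) if command is not None else None
```

For example, `route(GazeEvent(1, "lamp", 500), InputEvent("mouse", "scroll_up"))` maps the scroll onto the lamp, while a 100 ms glance at the speaker leaves the previously selected device in control. A trained decision tree would learn such thresholds and branches from data instead of hard-coding them.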

At the intersection of the Internet of Things (IoT) and Ubiquitous Computing, InSight reimagines interaction with connected devices, offering a seamless and intuitive experience. Its gaze-based control holds particular promise for individuals with limited mobility, potentially increasing independence and improving quality of life. InSight was published at the Augmented Human International Conference and recognized with the Best Short Paper Award. InSight hints at a future where technology seamlessly anticipates our needs and responds organically.

- Software Engineering

- Hardware System Design

- Rapid Prototyping

- Machine Learning

- Project Management

- C

- C++

- C#

- Unity 3D

- Unreal Engine

- Android

- Python

- Java

- Kotlin

- Assembly

- HDL

- Embedded Linux

- Altium Designer

- LTspice

- Cadence OrCAD

- Adobe Illustrator

- Adobe Photoshop

- Adobe Premiere Pro

- Figma